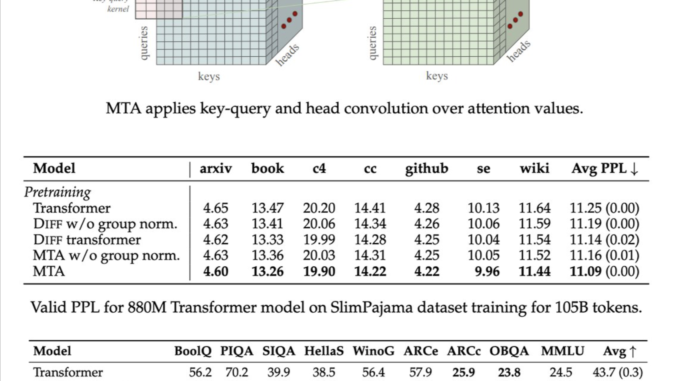

Meta AI Proposes Multi-Token Attention (MTA): A New Attention Method which Allows LLMs to Condition their Attention Weights on Multiple Query and Key Vectors

Large Language Models (LLMs) significantly benefit from attention mechanisms, enabling the effective retrieval of contextual information. Nevertheless, traditional attention methods primarily depend on single token attention, where each attention weight is computed from a single […]