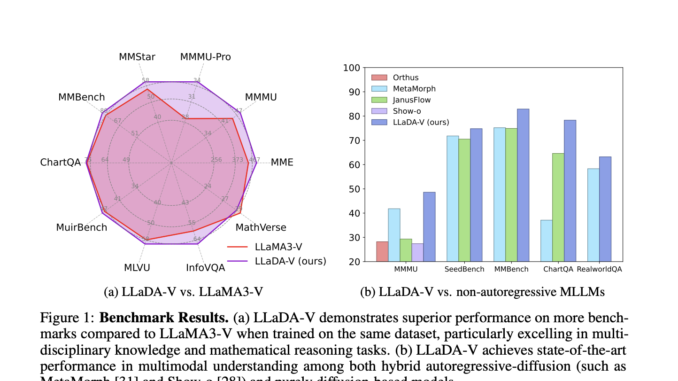

This AI Paper Introduces LLaDA-V: A Purely Diffusion-Based Multimodal Large Language Model for Visual Instruction Tuning and Multimodal Reasoning

Multimodal large language models (MLLMs) process and generate content across several modalities, including text, images, audio, and video. They aim to understand and integrate information from these different sources, enabling applications such […]